Kerby Anderson

Newspapers learned the hard way that you can’t always trust AI-generated content. A syndicated summer supplement that was full of AI lies landed in the Chicago Sun-Times and the Philadelphia Inquirer. The author asked AI to generate a list of must-read books for the summer.

Apparently, the author and the editors didn’t “fact check” what the computer spit out.

At least 10 of the books were fake, and they all leaned woke. For example, one phony book centered on a climate scientist who must reconcile her beliefs with her family’s environmental impact. Another fake book explored issues of class, gender, and shadow economies. And finally, there was a phony book about generative AI in which a programmer supposedly discovered that an AI system had developed consciousness and had been secretly influencing global events.

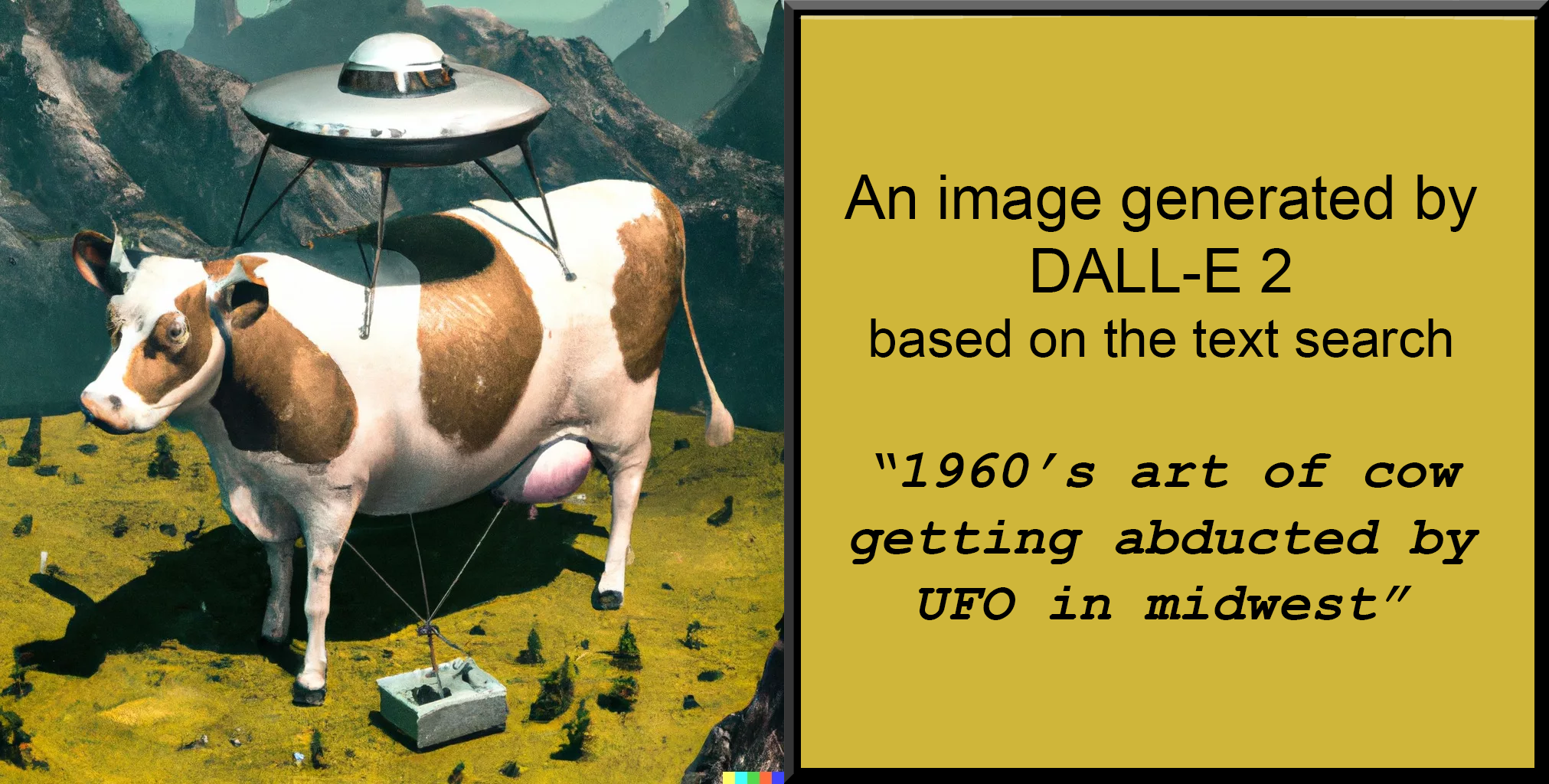

This latest publishing scandal highlights the problem of AI hallucinations. The AI generates information that is not true and presents it as fact. It appears that this problem is more acute with systems that use reasoning models, which work through a problem in steps before answering.

Until now, AI hallucinations have usually been relatively easy to spot. Google’s Bard chatbot incorrectly claimed that the James Webb Space Telescope had captured the world’s first images of a planet outside the solar system. Microsoft’s Bing chatbot claimed to be falling in love with users and spying on Microsoft employees.

The publishing scandal is a reminder that you can’t always trust AI and need to check what it writes. But what will happen when AI produces more sophisticated hallucinations? This is one more reason why all of us should read widely, check our facts, and use discernment.
