Kerby Anderson

I’ve played basketball and programmed computers. But I never thought putting the two together would be a problem. An AI computer got the facts of a recent NBA playoff game all wrong and generated a fictitious story.

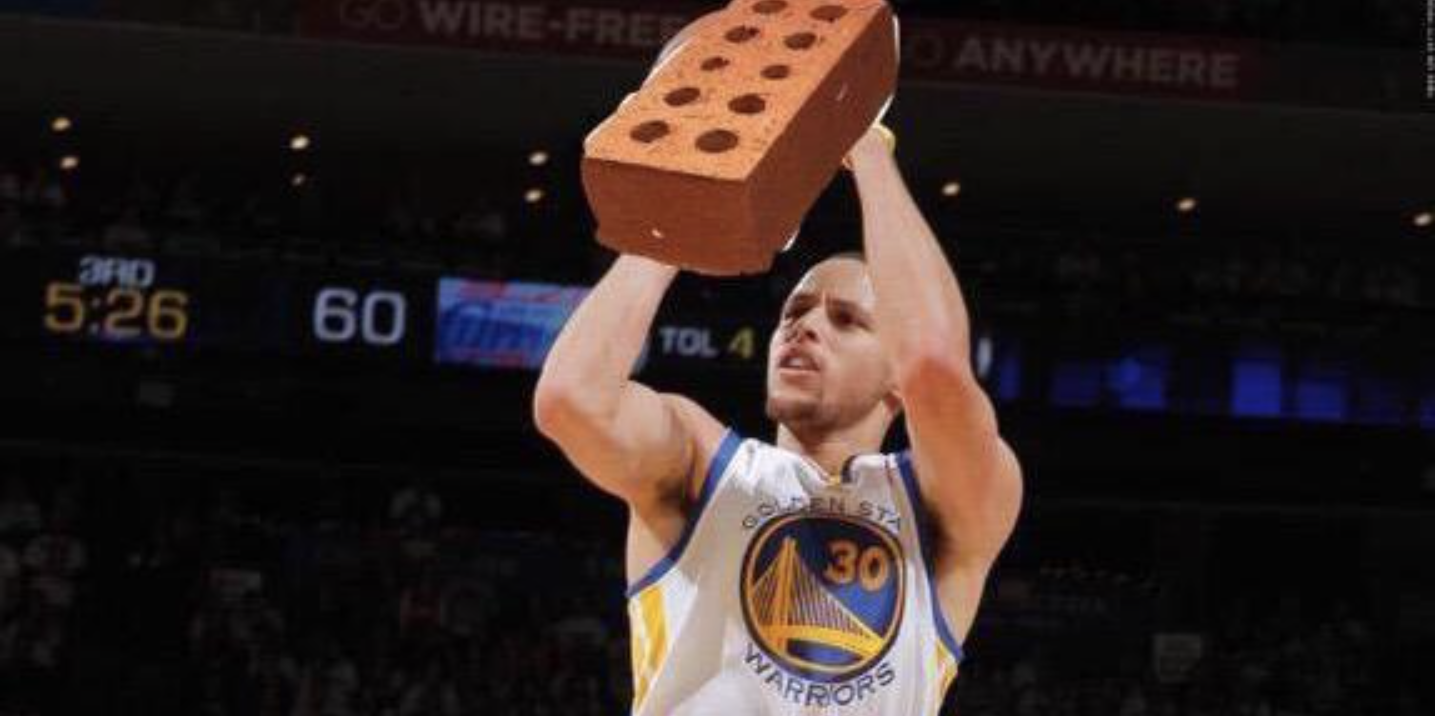

Klay Thompson plays for the Golden State Warriors. Although he is an excellent shooter, he went an abysmal 0 for 10 from the 3-point line when the Warriors played the Sacramento Kings.

Here is the story the AI computer created: “In a bizarre turn of events, NBA star Klay Thompson has been accused of vandalizing multiple houses with bricks in Sacramento…. The incidents have left the community shaken, but no injuries were reported. The motive behind the alleged vandalism remains unclear.”

The AI computer apparently pulled some online comments about Thompson “throwing up bricks” and generated this fictitious story. It illustrates what could happen when AI is unsupervised and out of control.

The story accuses Klay Thompson of doing something he did not do, claims houses were vandalized, and admits it does not know his motive. It also assumes no injuries took place because none were reported. Remember, none of this happened. It was created in the mind (or circuitry) of the AI computer.

Most people who have reported on this fictitious story find it funny. I find it scary. The AI computer obviously didn’t know the basketball slang “throwing up bricks.” But it created a whole story out of a misunderstanding. Let me remind you that whole reputations have been ruined because of a misunderstanding. For that matter, wars have started over misunderstandings.

This may be a silly story, but it does illustrate that AI isn’t as reliable as we have been led to believe.
